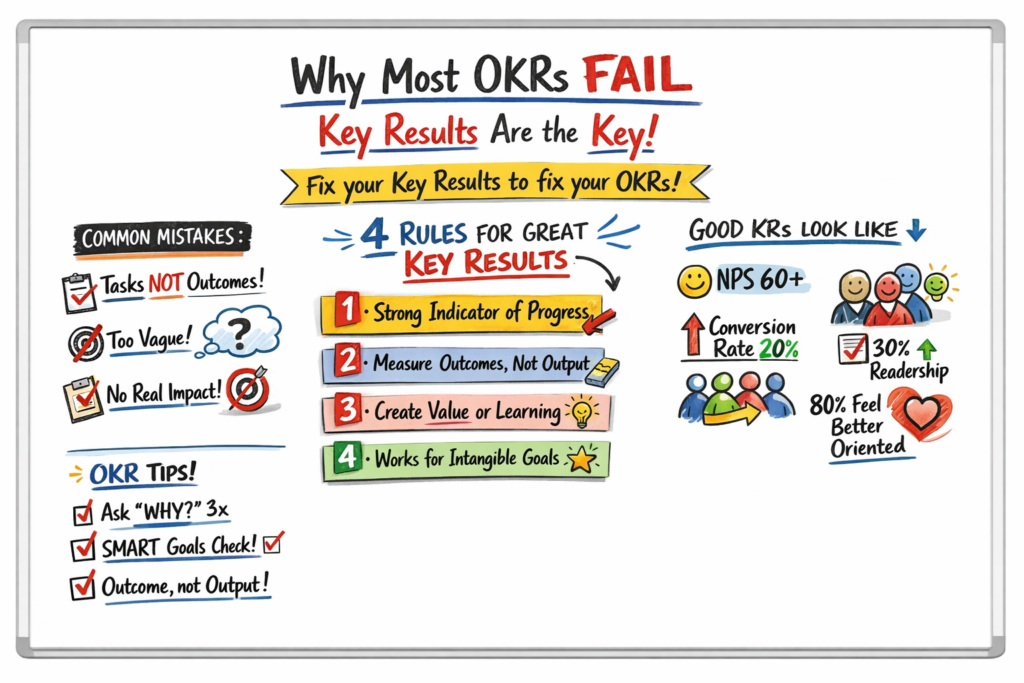

If you remember just one thing, let it be this: well-defined Key Results are the most important part of OKRs. If you don’t nail your Key Results, the whole OKR system loses its main benefits.

In practice, most OKR failures don’t come from bad Objectives. They come from weak, task-based, or misleading Key Results.

Before we dive into examples, here are the four rules this article covers:

- A Key Result must be a strong indicator of progress

- Key Results measure outcomes, not output

- A good Key Result should deliver value or clear learning

- Even “soft” Objectives need outcome-based Key Results

Let’s look at the most common mistakes—and how to fix them.

Rule #1:

A Key Result Must Be a Strong Indicator of Progress

Let’s start with a simple example:

(O) Improve user experience with our product

(KR1) Create a user survey

(KR2) Collect 1,000 responses

(KR3) Reach NPS over 60

(KR4) Increase conversion rate by 20%

The core idea is this: each Key Result should be strong evidence that you’re getting closer to your Objective.

Say you have already created and sent out the survey. How much have you moved the needle on “improving user experience”? Probably not at all. Users are not more satisfied just because they filled out a survey.

On the other hand, reaching an NPS over 60 or increasing the conversion rate by 20% are clear signals that something meaningful changed in user behavior and perception.

So:

- Tasks like “create a survey” or “collect responses” are outputs

- Metrics like NPS or conversion rate are outcomes

Don’t mix tasks with Key Results.

One big benefit of good Key Results is that they force you to define what “improve” actually means. Objectives are often generic by nature, and that creates ambiguity. Key Results remove that ambiguity.

Rule #2:

Key Results Measure Outcomes, Not Output

Another example:

(O) Improve our internal documentation

(KR1) Readership increases by 30%

(KR2) New platform for internal documents

(KR3) 100% of documents moved to the new platform

In reality, only the first one is a good Key Result.

Launching a new platform, creating a new structure, and migrating all documents might be a lot of work. You produce a lot of output. But does it guarantee improvement? Not necessarily.

What if employees don’t use the current documentation because the content is irrelevant or confusing? A shiny new platform won’t fix that. You’ll just have the same problems in a new place.

This is a classic OKR mistake: confusing activity with impact.

In some areas, such as infrastructure work, compliance, migrations, or regulatory projects, companies do use milestone- or delivery-based Key Results, and sometimes that’s unavoidable.

However, if you can express an outcome (for example: faster onboarding, fewer incidents, higher adoption, lower error rate), it is always more useful than tracking pure delivery.

Assumptions vs. Hypotheses

When we face a problem, our brains immediately jump to solutions. That’s a great human ability, but it’s also dangerous, because solutions are based on assumptions.

In the documentation example, many people would intuitively assume:

“To improve documentation, we need a new platform.”

But what if that assumption is wrong? What if you do all this work and people still don’t use the docs?

Well-defined Key Results help you here because they:

- Force you to describe the problem more precisely

- Turn assumptions into testable hypotheses

- Shift your thinking from OUTPUT to OUTCOME

Key Results (outcomes) are your anchor. You don’t give up on them just because one solution failed.

Projects and tasks (outputs) are adjustable. If one approach doesn’t work, you try another.

At the same time, OKRs are time-boxed. Sometimes you also learn that the Objective itself was wrong or not worth pursuing—and that’s a valid outcome too.

Rule #3:

A Good Key Result Delivers Value or Clear Learning

Let’s look at a research-type objective:

(O) Research the potential for a Top Client website section

(KR1) Run 50 interviews with top clients

(KR2) Run user testing sessions with 50+ clients

(KR3) Define epics with a potential impact on 30%+ of top users

Interviews and tests generate information, which is useful, but raw data usually doesn’t create value on its own. A well-defined epic or developer-ready scope, on the other hand, can be tested and used quickly. That makes KR3 the strongest of the three.

A good way to think about this:

A good Key Result should either deliver business value, or reduce uncertainty in a way that clearly enables future value.

That’s how you stay agile: small chunks of value or learning, fast feedback, and room to adapt.

Rule #4:

Even “Soft” Objectives Need Outcome Key Results

What about objectives that sound very abstract?

(O) Define and implement new company values

(KR1) Run 2 brainstorming sessions to review our values

(KR2) Each office space has at least 1 physical display of our values

(KR3) 80% of employees report feeling better oriented in the company

Meetings and posters don’t guarantee real business value.

But if 80% of employees feel better oriented in the company, that likely leads to better decisions and higher productivity—and that does translate into business value. Even “soft” objectives can (and should) have outcome-based, measurable Key Results.

The Practical Fix: Turn Tasks into Real Key Results

Let’s say you have this objective:

(O) Improve the filters on our website

And these Key Results:

(KR1) Analyze user behavior in the filter area

(KR2) Define new categories for our filters

(KR3) Design better UX for our filters

(KR4) A/B test new version of filters

These don’t guarantee improvement. They don’t create business value by themselves. They are outputs, not outcomes.

Now let’s rewrite them by starting with the value:

“If we are successful with this objective, the value for the business will be…”

For example:

(KR1) Increase filter usage by 20%

(KR2) Increase the number of filters used per search by 30%

(KR3) Increase GMV per session by 10%

Now these Key Results describe what success looks like in business terms.
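A Key Result like “increase X by 20%” only becomes checkable once you record a baseline before the period starts. The sketch below is a minimal illustration of that arithmetic, assuming hypothetical baseline and current values for the three filter Key Results. The `KeyResult` class, its method names, and the clamped 0–100% progress score are illustrative choices, not a standard OKR grading scheme.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    """Hypothetical model of an outcome KR: a target relative increase over a baseline."""
    name: str
    baseline: float    # metric value measured before the OKR period (hypothetical)
    current: float     # metric value at check-in time (hypothetical)
    target_pct: float  # e.g. 20.0 for "increase by 20%"

    def relative_change_pct(self) -> float:
        """Percent change from baseline: (current - baseline) / baseline * 100."""
        if self.baseline == 0:
            raise ValueError("baseline must be non-zero to compute a relative change")
        return (self.current - self.baseline) / self.baseline * 100

    def progress(self) -> float:
        """Share of the target increase achieved so far, clamped to [0, 1]."""
        return max(0.0, min(1.0, self.relative_change_pct() / self.target_pct))


# Illustrative numbers only; they are not real measurements.
krs = [
    KeyResult("Filter usage (sessions using filters)", baseline=10_000, current=11_500, target_pct=20),
    KeyResult("Filters used per search", baseline=2.0, current=2.6, target_pct=30),
    KeyResult("GMV per session", baseline=40.0, current=42.0, target_pct=10),
]

for kr in krs:
    print(f"{kr.name}: {kr.relative_change_pct():+.1f}% vs. target +{kr.target_pct}% "
          f"-> progress {kr.progress():.0%}")
```

Reading the three results together, rather than any single one, reflects the point about combining metrics: here filter behavior moved a lot while GMV per session moved only halfway to its target.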

One nuance: metrics can sometimes be gamed or have side effects. That’s why in practice it’s smart to combine multiple metrics (behavior, business impact, sometimes satisfaction). Still, this outcome framing is far stronger than listing tasks.

A Short Checklist for Better OKRs

- When you set an Objective, ask “Why?” three times.

- Use SMART as a sanity check, not a rigid rule:

  - Specific

  - Measurable

  - Achievable (or deliberately ambitious)

  - Relevant

  - Time-bound

- And always ask:

  - Does this KR strongly indicate progress?

  - Is it an outcome, not an output?

  - Does it create value or meaningful learning?

OKRs usually don’t fail because teams choose bad Objectives.

They fail because Key Results are written as tasks, plans, or wishful thinking.

Fix your Key Results — and most OKR problems disappear automatically.